SQL Server 2014 CTP1 has been out for a few weeks now, and you’ve likely seen quite a bit of press about memory-optimized tables and updateable columnstore indexes. While these are certainly worthy of attention, in this post I wanted to explore the new SELECT … INTO parallelism improvement. The improvement is one of those ready-to-wear changes that, from the looks of it, will not require significant code changes in order to start benefiting from it. My explorations were performed using version Microsoft SQL Server 2014 (CTP1) – 11.0.9120.5 (X64), Enterprise Evaluation Edition.

Parallel SELECT … INTO

SQL Server 2014 introduces parallel-enabled SELECT ... INTO for databases and to test this feature I used the AdventureWorksDW2012 database and a version of the FactInternetSales table that had 61,847,552 rows in it (I was responsible for adding those rows; they don’t come with the database by default).

Because this feature, as of CTP1, requires database compatibility level 110, for testing purposes I set the database to compatibility level 100 and executed the following query for my first test:

SELECT [ProductKey],

[OrderDateKey],

[DueDateKey],

[ShipDateKey],

[CustomerKey],

[PromotionKey],

[CurrencyKey],

[SalesTerritoryKey],

[SalesOrderNumber],

[SalesOrderLineNumber],

[RevisionNumber],

[OrderQuantity],

[UnitPrice],

[ExtendedAmount],

[UnitPriceDiscountPct],

[DiscountAmount],

[ProductStandardCost],

[TotalProductCost],

[SalesAmount],

[TaxAmt],

[Freight],

[CarrierTrackingNumber],

[CustomerPONumber],

[OrderDate],

[DueDate],

[ShipDate]

INTO dbo.FactInternetSales_V2

FROM dbo.FactInternetSales;

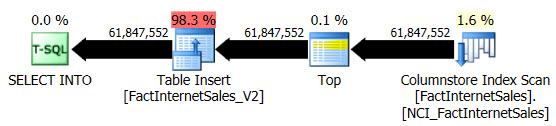

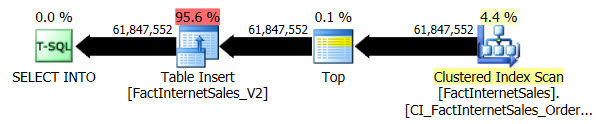

The query execution duration was 3 minutes and 19 seconds on my test VM and the actual query execution plan produced was as follows:

SQL Server used a serial plan, as I expected. Notice also that my table had a nonclustered columnstore index on it which was scanned (I created this nonclustered columnstore index for use with other tests, but I’ll show you the clustered columnstore index query execution plan later as well). The plan did not use parallelism and the Columnstore Index Scan used row execution mode instead of batch execution mode.

So next, I modified the database compatibility level (and note that there isn’t a SQL Server 2014 compatibility level in CTP1 yet):

USE [master];

GO

ALTER DATABASE [AdventureWorksDW2012] SET COMPATIBILITY_LEVEL = 110;

GO

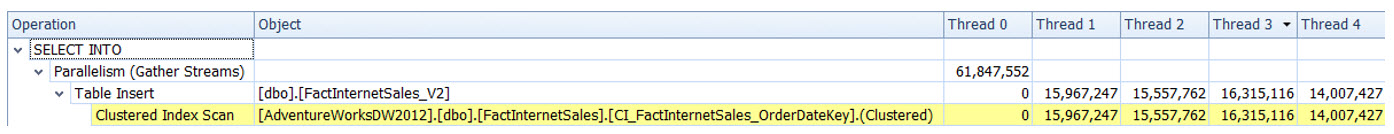

I dropped the FactInternetSales_V2 table and then re-executed my original SELECT ... INTO operation. This time the query execution duration was 1 minute and 7 seconds and the actual query execution plan was as follows:

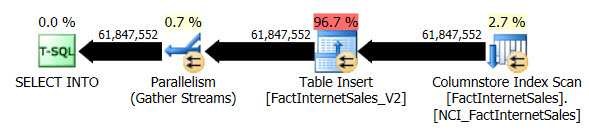

We now have a parallel plan and the only change I had to make was to the database compatibility level for AdventureWorksDW2012. My test VM has four vCPUs allocated to it, and the query execution plan distributed rows across four threads:

The nonclustered Columnstore Index Scan, while using parallelism, didn’t use batch execution mode. Instead it used row execution mode.

Here is a table to show the test results so far:

| Scan Type | Compatibility level | Parallel SELECT … INTO | Execution Mode | Duration |

|---|---|---|---|---|

| Nonclustered Columnstore Index Scan | 100 | No | Row | 3:19 |

| Nonclustered Columnstore Index Scan | 110 | Yes | Row | 1:07 |

So as a next test, I dropped the nonclustered columnstore index and re-executed the SELECT ... INTO query using both the database compatibility level 100 and 110.

The compatibility level 100 test took 5 minutes and 44 seconds to run, and the following plan was generated:

The serial Clustered Index Scan took 2 minutes and 25 seconds longer than the serial nonclustered Columnstore Index Scan.

Using compatibility level 110, the query took 1 minute and 55 seconds to run, and the following plan was generated:

Similar to the parallel nonclustered Columnstore Index Scan test, the parallel Clustered Index Scan distributed rows across four threads:

The following table summarizes these two aforementioned tests:

| Scan Type | Compatibility level | Parallel SELECT … INTO | Execution Mode | Duration |

|---|---|---|---|---|

| Clustered Index Scan | 100 | No | Row (N/A) | 5:44 |

| Clustered Index Scan | 110 | Yes | Row (N/A) | 1:55 |

So then I wondered about the performance for a clustered columnstore index (new in SQL Server 2014), so I dropped the existing indexes and created a clustered columnstore index on the FactInternetSales table. I also had to drop the eight different foreign key constraints defined on the table before I could create the clustered columnstore index.

The discussion becomes somewhat academic, since I'm comparing SELECT ... INTO performance at database compatibility levels that didn’t offer clustered columnstore indexes in the first place – nor did the earlier tests for nonclustered columnstore indexes at database compatibility level 100 – and yet it is interesting to see and compare the overall performance characteristics.

CREATE CLUSTERED COLUMNSTORE INDEX [CCSI_FactInternetSales]

ON [dbo].[FactInternetSales]

WITH (DROP_EXISTING = OFF);

GO

As an aside, the operation to create the clustered columnstore index on a 61,847,552 million row table took 11 minutes and 25 seconds with four available vCPUs (of which the operation leveraged them all), 4GB of RAM and virtual guest storage on OCZ Vertex SSDs. During that time the CPUs weren’t pegged the entire time, but rather displayed peaks and valleys (a sampling of 60 seconds of CPU activity shown below):

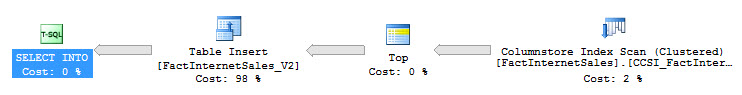

After the clustered columnstore index was created, I re-executed the two SELECT ... INTO tests. The compatibility level 100 test took 3 minutes and 22 seconds to run, and the plan was a serial one as expected (I'm showing the SQL Server Management Studio version of the plan since the clustered Columnstore Index Scan, as of SQL Server 2014 CTP1, is not yet fully recognized by Plan Explorer):

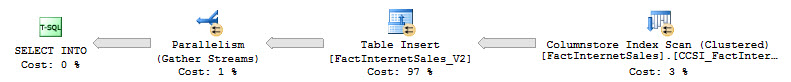

Next I changed the database compatibility level to 110 and re-ran the test, which this time the query took 1 minute and 11 seconds and had the following actual execution plan:

The plan distributed rows across four threads, and just like the nonclustered columnstore index, the execution mode of the clustered Columnstore Index Scan was row and not batch.

The following table summarizes all the tests within this post (in order of duration, low to high):

| Scan Type | Compatibility level | Parallel SELECT … INTO | Execution Mode | Duration |

|---|---|---|---|---|

| Nonclustered Columnstore Index Scan | 110 | Yes | Row | 1:07 |

| Clustered Columnstore Index Scan | 110 | Yes | Row | 1:11 |

| Clustered Index Scan | 110 | Yes | Row (N/A) | 1:55 |

| Nonclustered Columnstore Index Scan | 100 | No | Row | 3:19 |

| Clustered Columnstore Index Scan | 100 | No | Row | 3:22 |

| Clustered Index Scan | 100 | No | Row (N/A) | 5:44 |

A few observations:

- I'm not sure if the difference between a parallel

SELECT ... INTOoperation against a nonclustered columnstore index versus clustered columnstore index is statistically significant. I'd need to do more tests, but I think I would wait to perform those until RTM. - I can safely say that the parallel

SELECT ... INTOdid significantly outperform the serial equivalents across a clustered index, nonclustered columnstore and clustered columnstore index tests.

It is worth mentioning that these results are for a CTP version of the product, and my tests should be viewed as something that could shift in behavior by RTM – so I was less interested in the standalone durations versus how those durations compared between serial and parallel conditions.

Some performance features require significant refactoring – but for the SELECT ... INTO improvement, all I had to do was bump up the database compatibility level in order to start seeing the benefits, which is definitely something I appreciate.

Great stuff, Joe ! :)

I keep wondering why did the Batch Mode does not kick-in, maybe because the optimizer realizes that the bottleneck would be the insert anyway … But from the other side we can do data reading from a slower filegroup and inserting into a much faster one …

Also I keep on noticing quite a constant delay difference in execution times between Clustered and Nonclustered Columnstore Indexes, with better times going for Nonclustered Columnstore Indexes, wonder if its a time spent scanning potential Delta Stores plus Tuple Mover synchronization …

Thank you Niko!

Regarding your comment on the delay – I'll be interested to see if this changes over the CTPs (hopefully?) I haven't been testing a comparison specifically, but now based on your comment, I'll be paying attention.

I wish this feature was more general and would work with other bulk DML, too. INSERT…SELECT being an obvious candidate because you can choose the table structure with that. Heaps are not the most interesting storage scheme…

Gr8 work Joe, keep it up ..! ,Hope this feature rocks for MSBI execute sql task for creating Staging tables.

I'm with you, tobi…I have an ETL process that creates the table first so that there's not a schema lock during the load, but by doing that, my INSERT INTO takes on the order of 15 times as long than SELECT INTO does. Kind of a waste of this giant server hardware to not make use of it during OTHER bulk DML.